AudioTrack音频输出组件

概述

该组件主要实现音频输出对外的接口,它实际会使用到AudioSystem和AudioFlinger两个模块一起完成

音频输出的任务。

AudioTrack工作流程(C++层)

初始化AudioTrack实例

AudioTrack::AudioTrack( int streamType, uint32_t sampleRate, int format,

int channelMask, int frameCount, uint32_t flags, callback_t cbf,

void* user, int notificationFrames, int sessionId)

: mStatus(NO_INIT)

{

mStatus = set(streamType, sampleRate, format, channelMask,

frameCount, flags, cbf, user, notificationFrames,

0, false, sessionId);

}

设置音频输出参数和创建I/O句柄

status_t AudioTrack::set( int streamType, uint32_t sampleRate, int format,

int channelMask, int frameCount, uint32_t flags, callback_t cbf,

void* user, int notificationFrames, const sp<IMemory>& sharedBuffer,

bool threadCanCallJava, int sessionId)

{

......

// 通过AudioSystem获取音频相关的参数设置

int afSampleRate;

if (AudioSystem::getOutputSamplingRate(&afSampleRate, streamType) != NO_ERROR) {

return NO_INIT;

}

uint32_t afLatency;

if (AudioSystem::getOutputLatency(&afLatency, streamType) != NO_ERROR) {

return NO_INIT;

}

// 对默认的设置作处理

if (streamType == AUDIO_STREAM_DEFAULT) {

streamType = AUDIO_STREAM_MUSIC;

}

if (sampleRate == 0) {

sampleRate = afSampleRate;

}

if (format == 0) {

format = AUDIO_FORMAT_PCM_16_BIT;

}

if (channelMask == 0) {

channelMask = AUDIO_CHANNEL_OUT_STEREO;

}

// 验证格式是否正确

if (!audio_is_valid_format(format)) {

return BAD_VALUE;

}

if (!audio_is_linear_pcm(format)) {

flags |= AUDIO_POLICY_OUTPUT_FLAG_DIRECT;

}

if (!audio_is_output_channel(channelMask)) {

return BAD_VALUE;

}

uint32_t channelCount = popcount(channelMask);

// 获取一个StreamOutput的对象句柄,之后作为参数传递给createTrack_l方法

audio_io_handle_t output = AudioSystem::getOutput(

(audio_stream_type_t)streamType,

sampleRate,format, channelMask,

(audio_policy_output_flags_t)flags);

if (output == 0) {

return BAD_VALUE;

}

......

// 创建IAudioTrack接口,该接口由TrackHandle实现,

// 参见AudioFlinger一节

status_t status = createTrack_l(streamType, sampleRate,

(uint32_t)format, (uint32_t)channelMask,

frameCount, flags, sharedBuffer,

output, true);

......

// 创建一个播放的工作线程,为音频输出做准备

if (cbf != 0) {

mAudioTrackThread = new AudioTrackThread(*this, threadCanCallJava);

if (mAudioTrackThread == 0) {

return NO_INIT;

}

}

......

return NO_ERROR;

}

创建IAudioTrack对象

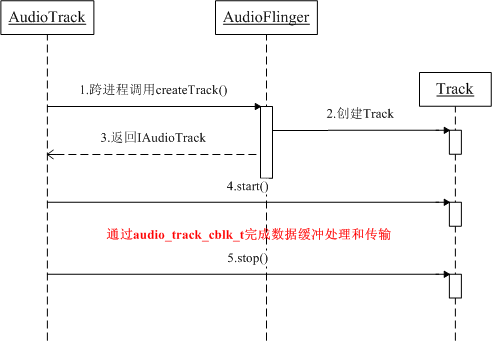

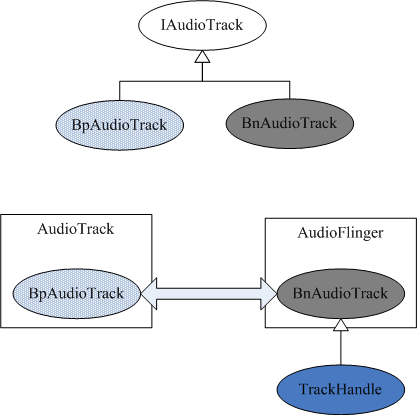

- AudioTrack和AudioFlinger的关系可参考下图

status_t AudioTrack::createTrack_l(

int streamType,

uint32_t sampleRate,

uint32_t format,

uint32_t channelMask,

int frameCount,

uint32_t flags,

const sp<IMemory>& sharedBuffer,

audio_io_handle_t output,

bool enforceFrameCount)

{

// 通过AudioSystem获取IAudioFlinger接口,实际的任务由该接口完成

status_t status;

const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();

if (audioFlinger == 0) {

return NO_INIT;

}

// 获取一些输出的参数

int afSampleRate;

if (AudioSystem::getOutputSamplingRate(&afSampleRate, streamType) != NO_ERROR) {

return NO_INIT;

}

int afFrameCount;

if (AudioSystem::getOutputFrameCount(&afFrameCount, streamType) != NO_ERROR) {

return NO_INIT;

}

uint32_t afLatency;

if (AudioSystem::getOutputLatency(&afLatency, streamType) != NO_ERROR) {

return NO_INIT;

}

......

// 调用AudioFlinger的createTrack方法

sp<IAudioTrack> track = audioFlinger->createTrack(getpid(),

streamType,

sampleRate,

format,

channelMask,

frameCount,

((uint16_t)flags) << 16,

sharedBuffer,

output,

&mSessionId,

&status);

......

// 由AudioFlinger创建一块共享内存,再分配到AudioTrack对象中

sp<IMemory> cblk = track->getCblk();

if (cblk == 0) {

return NO_INIT;

}

mAudioTrack.clear();

mAudioTrack = track;

mCblkMemory.clear();

mCblkMemory = cblk;

mCblk = static_cast<audio_track_cblk_t*>(cblk->pointer());

android_atomic_or(CBLK_DIRECTION_OUT, &mCblk->flags);

if (sharedBuffer == 0) {

mCblk->buffers = (char*)mCblk + sizeof(audio_track_cblk_t);

} else {

mCblk->buffers = sharedBuffer->pointer();

// Force buffer full condition as data is already present in shared memory

mCblk->stepUser(mCblk->frameCount);

}

mCblk->volumeLR = (uint32_t(uint16_t(mVolume[RIGHT] * 0x1000)) << 16) | uint16_t(mVolume[LEFT] * 0x1000);

mCblk->sendLevel = uint16_t(mSendLevel * 0x1000);

mAudioTrack->attachAuxEffect(mAuxEffectId);

mCblk->bufferTimeoutMs = MAX_STARTUP_TIMEOUT_MS;

mCblk->waitTimeMs = 0;

mRemainingFrames = mNotificationFramesAct;

mLatency = afLatency + (1000*mCblk->frameCount) / sampleRate;

return NO_ERROR;

}

创建AudioTrackThread

- 在初始化最后阶段,AudioTrack会创建一个AudioTrackThread对象,该对象是一个线程类。

- 通常将AudioTrack的this指针作为参数传递给AudioTrackThread对象,以便在线程开启时,执行数据处理的方法。

// 构造方法的第一个参数标识了AudioTrack对象

AudioTrack::AudioTrackThread::AudioTrackThread(AudioTrack& receiver, bool bCanCallJava)

: Thread(bCanCallJava), mReceiver(receiver)

{

}

// 线程的执行方法会调用mReciver的processAudioBuffer方法处理缓冲的数据

bool AudioTrack::AudioTrackThread::threadLoop()

{

return mReceiver.processAudioBuffer(this);

}

开始音频输出处理

-

通常在创建了AudioTrack并设置了参数后,会调用start方法进行音频输出处理,该方法主要有以下作用。

- 开启本地的AudioTrackThread线程,用于pull方式。

-

调用IAudioTrack接口的start方法,用于push方式。

- 最终执行Track类的start方法通知底层输出数据。

void AudioTrack::start()

{

sp<AudioTrackThread> t = mAudioTrackThread;

status_t status = NO_ERROR;

......

AutoMutex lock(mLock);

// 获取IAudioTrack接口

sp <IAudioTrack> audioTrack = mAudioTrack;

sp <IMemory> iMem = mCblkMemory;

// 获取缓冲区对象

audio_track_cblk_t* cblk = mCblk;

if (mActive == 0) {

......

// 执行AudioTrackThread线程

if (t != 0) {

t->run("AudioTrackThread", ANDROID_PRIORITY_AUDIO);

} else {

setpriority(PRIO_PROCESS, 0, ANDROID_PRIORITY_AUDIO);

}

if (!(cblk->flags & CBLK_INVALID_MSK)) {

cblk->lock.unlock();

// 调用IAudioTrack::start方法

status = mAudioTrack->start();

cblk->lock.lock();

......

}

......

}

......

}

status_t AudioFlinger::PlaybackThread::Track::start()

{

status_t status = NO_ERROR;

sp<ThreadBase> thread = mThread.promote();

if (thread != 0) {

Mutex::Autolock _l(thread->mLock);

int state = mState;

if (mState == PAUSED) {

mState = TrackBase::RESUMING;

} else {

mState = TrackBase::ACTIVE;

}

if (!isOutputTrack() && state != ACTIVE && state != RESUMING) {

thread->mLock.unlock();

// 通知HAL层开始音频输出

status = AudioSystem::startOutput(thread->id(),

(audio_stream_type_t)mStreamType,

mSessionId);

thread->mLock.lock();

......

}

if (status == NO_ERROR) {

// 将当前的Track加入活动列表

PlaybackThread *playbackThread = (PlaybackThread *)thread.get();

playbackThread->addTrack_l(this);

} else {

mState = state;

}

} else {

status = BAD_VALUE;

}

return status;

}

停止音频输出处理

-

播放结束后,还需要调用stop方法,该方法会被析构析函数析调用。

- 最终也会调用Track的stop方法停止输出数据。

void AudioTrack::stop()

{

sp<AudioTrackThread> t = mAudioTrackThread;

......

AutoMutex lock(mLock);

if (mActive == 1) {

mActive = 0;

mCblk->cv.signal();

// 调用IAudioTrack::stop方法

mAudioTrack->stop();

// 清空缓冲播放设置

setLoop_l(0, 0, 0);

mMarkerReached = false;

// 刷新位置以便AudioFlinger停止播放

if (mSharedBuffer != 0) {

flush_l();

}

......

}

......

}

void AudioFlinger::PlaybackThread::Track::stop()

{

sp<ThreadBase> thread = mThread.promote();

if (thread != 0) {

Mutex::Autolock _l(thread->mLock);

int state = mState;

if (mState > STOPPED) {

mState = STOPPED;

// 查找活动的Track

PlaybackThread *playbackThread = (PlaybackThread *)thread.get();

if (playbackThread->mActiveTracks.indexOf(this) < 0) {

reset();

}

}

if (!isOutputTrack() && (state == ACTIVE || state == RESUMING)) {

thread->mLock.unlock();

// 通知HAL层停止音频输出

AudioSystem::stopOutput(thread->id(),

(audio_stream_type_t)mStreamType,

mSessionId);

thread->mLock.lock();

......

}

}

}

音频数据处理(pull方式)

-

当使用pull方式时,AudioTrackThread才真正起作用,

mCbf是一个callback_t类型的回调,EVENT_MORE_DATA标识表示需要从用户那里获取数据。

// pull方式的回调

typedef void (*callback_t)(int event, void* user, void *info);

// 事件类型

enum event_type {

EVENT_MORE_DATA = 0, // 需要更多的数据

EVENT_UNDERRUN = 1, // 表示Audio硬件处于低负荷状态

EVENT_LOOP_END = 2, // 表示已经到达播放终点

EVENT_MARKER = 3, // 数据使用的警戒通知,且只会通知一次

EVENT_NEW_POS = 4, // 数据使用进度通知,以帧为单位

EVENT_BUFFER_END = 5 // 数据全部被消耗

};

- 音频数据的处理通过共享内存来实现,而这个共享对象由AudioFlinger端创建,这个共享对象被抽象为audio_track_cblk_t。

-

音频的数据缓冲区通过

obtainBuffer方法获取,再通过releaseBuffer方法释放。 -

在处理音频数据时需要考虑

underrun状态,该状态是指生产者提供数据的速度跟不上消费者使用数据的速度。一般遇到这种情况的处理方法是暂停输出,等数据准备好后再恢复输出。

bool AudioTrack::processAudioBuffer(const sp<AudioTrackThread>& thread)

{

Buffer audioBuffer;

uint32_t frames;

size_t writtenSize;

......

// 处理underrun的情况

if (mActive && (cblk->framesAvailable() == cblk->frameCount)) {

if (!(android_atomic_or(CBLK_UNDERRUN_ON, &cblk->flags) & CBLK_UNDERRUN_MSK)) {

mCbf(EVENT_UNDERRUN, mUserData, 0);

// server是读位置,frameCount是buffer中的数据总和。

// 当读位置等于数据总和时,表示数据已经用完了。

if (cblk->server == cblk->frameCount) {

mCbf(EVENT_BUFFER_END, mUserData, 0);

}

if (mSharedBuffer != 0) return false;

}

}

// 处理循环结束的情况

while (mLoopCount > cblk->loopCount) {

int loopCount = -1;

mLoopCount--;

if (mLoopCount >= 0) loopCount = mLoopCount;

// 一次循环播放完毕,loopCount表示还剩多少次

mCbf(EVENT_LOOP_END, mUserData, (void *)&loopCount);

}

// 处理阀值阀情况

if (!mMarkerReached && (mMarkerPosition > 0)) {

if (cblk->server >= mMarkerPosition) {

// 如果数据的使用超过警戒值,则通知用户

mCbf(EVENT_MARKER, mUserData, (void *)&mMarkerPosition);

// 只通知一次,因为该值被设置为true

mMarkerReached = true;

}

}

// 处理位置更新

if (mUpdatePeriod > 0) {

while (cblk->server >= mNewPosition) {

// 以帧数为单位,进行通知

mCbf(EVENT_NEW_POS, mUserData, (void *)&mNewPosition);

mNewPosition += mUpdatePeriod;

}

}

......

do {

audioBuffer.frameCount = frames;

// 获取一块可写的缓冲区

status_t err = obtainBuffer(&audioBuffer, waitCount);

if (err < NO_ERROR) {

if (err != TIMED_OUT) {

return false;

}

break;

}

if (err == status_t(STOPPED)) return false;

// 将缓冲区大小除2,以便处理PCM8格式

if (mFormat == AUDIO_FORMAT_PCM_8_BIT && !(mFlags & AUDIO_POLICY_OUTPUT_FLAG_DIRECT)) {

audioBuffer.size >>= 1;

}

// 从用户那里pull数据

size_t reqSize = audioBuffer.size;

mCbf(EVENT_MORE_DATA, mUserData, &audioBuffer);

writtenSize = audioBuffer.size;

// Sanity check on returned size

if (ssize_t(writtenSize) <= 0) {

// The callback is done filling buffers

// Keep this thread going to handle timed events and

// still try to get more data in intervals of WAIT_PERIOD_MS

// but don't just loop and block the CPU, so wait

usleep(WAIT_PERIOD_MS*1000);

break;

}

if (writtenSize > reqSize) writtenSize = reqSize;

if (mFormat == AUDIO_FORMAT_PCM_8_BIT && !(mFlags & AUDIO_POLICY_OUTPUT_FLAG_DIRECT)) {

// 8 to 16 bit conversion

const int8_t *src = audioBuffer.i8 + writtenSize-1;

int count = writtenSize;

int16_t *dst = audioBuffer.i16 + writtenSize-1;

while(count--) {

*dst-- = (int16_t)(*src--^0x80) << 8;

}

writtenSize <<= 1;

}

audioBuffer.size = writtenSize;

// PCM8数据转PCM16

audioBuffer.frameCount = writtenSize/mCblk->frameSize;

frames -= audioBuffer.frameCount;

// 写完后释放缓冲区

releaseBuffer(&audioBuffer);

}

while (frames);

if (frames == 0) {

mRemainingFrames = mNotificationFramesAct;

} else {

mRemainingFrames = frames;

}

return true;

}

音频数据处理(push方式)

- push方式是由用户主动调用write写数据,这相当于数据被push到AudioTrack。

-

在音频数据的处理pull方式中,我们看见了在处理线程中会一直

obtainBuffer,然后再releaseBuffer。这些数据是由回调函数产生。那么在push方式中,就需要向外提供一个write方法,用来获取用户的音频数据。

ssize_t AudioTrack::write(const void* buffer, size_t userSize)

{

if (mSharedBuffer != 0) return INVALID_OPERATION;

if (ssize_t(userSize) < 0) {

return BAD_VALUE;

}

......

ssize_t written = 0;

const int8_t *src = (const int8_t *)buffer;

Buffer audioBuffer;

size_t frameSz = (size_t)frameSize();

do {

// 以帧为单位处理数据

audioBuffer.frameCount = userSize/frameSz;

// 获取空闲的共享内存数据

status_t err = obtainBuffer(&audioBuffer, -1);

if (err < 0) {

// out of buffers, return #bytes written

if (err == status_t(NO_MORE_BUFFERS))

break;

return ssize_t(err);

}

size_t toWrite;

if (mFormat == AUDIO_FORMAT_PCM_8_BIT && !(mFlags & AUDIO_POLICY_OUTPUT_FLAG_DIRECT)) {

// Divide capacity by 2 to take expansion into account

toWrite = audioBuffer.size>>1;

// PCM8转换PCM16

int count = toWrite;

int16_t *dst = (int16_t *)(audioBuffer.i8);

while(count--) {

*dst++ = (int16_t)(*src++^0x80) << 8;

}

} else {

// 通过memcpy拷贝数据

toWrite = audioBuffer.size;

memcpy(audioBuffer.i8, src, toWrite);

src += toWrite;

}

userSize -= toWrite;

written += toWrite;

releaseBuffer(&audioBuffer);

} while (userSize >= frameSz);

return written;

}

音频缓冲区对象

-

首先为了跨进程处理,这个缓冲区需要是一块共享内存,它由audio_track_cblk_t类实现。回到前面“创建IAudioTrack对象”一节,这个缓冲区对象是通过

track->getCblk()获得。 - AudioTrack端称为缓冲区数据的生产者,负责向缓冲区中写数据。AudioFlinger端则是缓冲区数据的消费者,负责读数据。

- 在跨进程的访问中,缓冲区对象同时也考虑了同步问题。

struct audio_track_cblk_t

struct audio_track_cblk_t

{

Mutex lock;

Condition cv; // 两个用于同步的变量

volatile uint32_t user; // 当前写位置(生产者写到什么位置)

volatile uint32_t server; // 当前读位置

uint32_t userBase;

uint32_t serverBase;

void* buffers; // 指向缓冲区的首地址

uint32_t frameCount; // 缓冲区的总大小,以Frame为单位

uint32_t loopStart; // 设置播放的起点

uint32_t loopEnd; // 设置播放的终点

int loopCount; // 循环播放的次数

volatile union {

uint16_t volume[2];

uint32_t volumeLR;

};

uint32_t sampleRate; // 采样率

uint8_t frameSize; // 一个Frame的大小

uint8_t pad1;

uint16_t bufferTimeoutMs;// Maximum cumulated timeout before restarting audioflinger

uint16_t waitTimeMs; // Cumulated wait time

uint16_t sendLevel;

volatile int32_t flags; // 控制标识,表示underrun或overrun状态

audio_track_cblk_t();

uint32_t stepUser(uint32_t frameCount); // 更新写位置

bool stepServer(uint32_t frameCount); // 更新读位置

void* buffer(uint32_t offset) const; // 返回可写空间的起始位置

uint32_t framesAvailable(); // 还剩多少空间可写

uint32_t framesAvailable_l();

uint32_t framesReady(); // 是否有可读数据

bool tryLock();

};

生产者如何使用缓冲区

- 在“音频数据的处理(pull或push方式)”一节中,都会调用AudioTrack::obtainBuffer方法,以该方法为线索,来分析数据是如何生产的。

-

对于生产者来说,缓冲区的使用主要是以下三个步骤:

-

调用

framesAvailable方法,获取当前可写的空间大小。 -

调用

buffer方法,获取当前可写空间的首地址,写入数据。 -

调用

stepUser方法,更新可写位置(被obtainBuffer调用)。

-

调用

AudioTrack::obtainBuffer

status_t AudioTrack::obtainBuffer(Buffer* audioBuffer, int32_t waitCount)

{

AutoMutex lock(mLock);

......

audioBuffer->frameCount = 0;

audioBuffer->size = 0;

// 得到当前可写的空间大小

uint32_t framesAvail = cblk->framesAvailable();

......

if (framesAvail == 0) {

cblk->lock.lock();

goto start_loop_here;

while (framesAvail == 0) {

......

if (!(cblk->flags & CBLK_INVALID_MSK)) {

mLock.unlock();

// 如果没有可写空间,则需要等待

result = cblk->cv.waitRelative(cblk->lock, milliseconds(waitTimeMs));

cblk->lock.unlock();

mLock.lock();

if (mActive == 0) {

return status_t(STOPPED);

}

cblk->lock.lock();

}

......

start_loop_here:

framesAvail = cblk->framesAvailable_l();

}

cblk->lock.unlock();

}

......

// user为可写空间的起始地址

uint32_t u = cblk->user;

uint32_t bufferEnd = cblk->userBase + cblk->frameCount;

if (u + framesReq > bufferEnd) {

framesReq = bufferEnd - u;

}

......

// 调用buffer,得到可写空间的首地址

audioBuffer->raw = (int8_t *)cblk->buffer(u);

active = mActive;

return active ? status_t(NO_ERROR) : status_t(STOPPED);

}

AudioTrack::releaseBuffer

void AudioTrack::releaseBuffer(Buffer* audioBuffer)

{

AutoMutex lock(mLock);

// 更新写位置

mCblk->stepUser(audioBuffer->frameCount);

}

audio_track_cblk_t::framesAvailable

uint32_t audio_track_cblk_t::framesAvailable()

{

Mutex::Autolock _l(lock);

return framesAvailable_l();

}

audio_track_cblk_t::framesAvailable_l

uint32_t audio_track_cblk_t::framesAvailable_l()

{

uint32_t u = this->user; // 当前写者位置

uint32_t s = this->server; // 当前读者位置

if (flags & CBLK_DIRECTION_MSK) {

uint32_t limit = (s < loopStart) ? s : loopStart;

return limit + frameCount - u;

} else {

return frameCount + u - s;

}

}

audio_track_cblk_t::buffer

void* audio_track_cblk_t::buffer(uint32_t offset) const

{

// buffers是缓冲区的起始位置,offset是对于userBase的偏移,这种方式可将

// 缓冲区当作一个环形缓冲来处理

return (int8_t *)this->buffers + (offset - userBase) * this->frameSize;

}

audio_track_cblk_t::stepUser

uint32_t audio_track_cblk_t::stepUser(uint32_t frameCount)

{

uint32_t u = this->user;

// 加上写入的帧数

u += frameCount;

// Ensure that user is never ahead of server for AudioRecord

if (flags & CBLK_DIRECTION_MSK) {

if (bufferTimeoutMs == MAX_STARTUP_TIMEOUT_MS-1) {

bufferTimeoutMs = MAX_RUN_TIMEOUT_MS;

}

} else if (u > this->server) {

u = this->server;

}

// 更新当前写位置的同时,也更新userBase,这样(offset-userBase)就可以回到缓冲区头。

if (u >= userBase + this->frameCount) {

userBase += this->frameCount;

}

// 更新当前写的位置

this->user = u;

return u;

}

消费者如何使用缓冲区

-

在AudioFlinger一节我们介绍了共享内存的创建是在

AudioFlinger::ThreadBase::TrackBase::TrackBase中,而音频数据的消费者实际上是其继承类Track。它主要使用以下三个方法:-

getNextBuffer:通过frameReady得到可读帧数。 -

getBuffer:函数根据可读帧数信息得到可读空间的首地址。 -

releaseBuffer:通过stepServer更新读信息。

-

- 我们以DirectOutputThread的线程处理为线索分析消费者如何使用缓冲区。

AudioFlinger::DirectOutputThread::threadLoop

bool AudioFlinger::DirectOutputThread::threadLoop()

{

......

while (frameCount) {

buffer.frameCount = frameCount;

// 获取可读帧数

activeTrack->getNextBuffer(&buffer);

if (UNLIKELY(buffer.raw == 0)) {

memset(curBuf, 0, frameCount * mFrameSize);

break;

}

memcpy(curBuf, buffer.raw, buffer.frameCount * mFrameSize);

frameCount -= buffer.frameCount;

curBuf += buffer.frameCount * mFrameSize;

// 更新读信息

activeTrack->releaseBuffer(&buffer);

}

......

}

AudioFlinger::PlaybackThread::Track::getNextBuffer

status_t AudioFlinger::PlaybackThread::Track::getNextBuffer(AudioBufferProvider::Buffer* buffer)

{

// 获取audio_track_cblk_t对象

audio_track_cblk_t* cblk = this->cblk();

uint32_t framesReady;

// 音频输出缓冲区大小,单位为帧数

uint32_t framesReq = buffer->frameCount;

......

// 计算有多少帧可读

framesReady = cblk->framesReady();

if (LIKELY(framesReady)) {

uint32_t s = cblk->server;

// 可读的最大位置

uint32_t bufferEnd = cblk->serverBase + cblk->frameCount;

bufferEnd = (cblk->loopEnd < bufferEnd) ? cblk->loopEnd : bufferEnd;

if (framesReq > framesReady) {

framesReq = framesReady;

}

if (s + framesReq > bufferEnd) {

framesReq = bufferEnd - s;

}

// 根据起始位置得到数据缓冲的起始地址

buffer->raw = getBuffer(s, framesReq);

if (buffer->raw == 0) goto getNextBuffer_exit;

buffer->frameCount = framesReq;

return NO_ERROR;

}

getNextBuffer_exit:

buffer->raw = 0;

buffer->frameCount = 0;

return NOT_ENOUGH_DATA;

}

AudioFlinger::ThreadBase::TrackBase::releaseBuffer

void AudioFlinger::ThreadBase::TrackBase::releaseBuffer(AudioBufferProvider::Buffer* buffer)

{

buffer->raw = 0;

mFrameCount = buffer->frameCount;

step();

buffer->frameCount = 0;

}

AudioFlinger::ThreadBase::TrackBase::step

bool AudioFlinger::ThreadBase::TrackBase::step() {

bool result;

audio_track_cblk_t* cblk = this->cblk();

result = cblk->stepServer(mFrameCount);

if (!result) {

mFlags |= STEPSERVER_FAILED;

}

return result;

}

audio_track_cblk_t::stepServer

bool audio_track_cblk_t::stepServer(uint32_t frameCount)

{

if (!tryLock()) {

return false;

}

// 获取当前读位置

uint32_t s = this->server;

s += frameCount;

if (flags & CBLK_DIRECTION_MSK) {

// Mark that we have read the first buffer so that next time stepUser() is called

// we switch to normal obtainBuffer() timeout period

if (bufferTimeoutMs == MAX_STARTUP_TIMEOUT_MS) {

bufferTimeoutMs = MAX_STARTUP_TIMEOUT_MS - 1;

}

// It is possible that we receive a flush()

// while the mixer is processing a block: in this case,

// stepServer() is called After the flush() has reset u & s and

// we have s > u

if (s > this->user) {

s = this->user;

}

}

// 处理读取到末尾的情况

if (s >= loopEnd) {

s = loopStart;

if (--loopCount == 0) {

loopEnd = UINT_MAX;

loopStart = UINT_MAX;

}

}

if (s >= serverBase + this->frameCount) {

serverBase += this->frameCount;

}

// 更新当前读位置

this->server = s;

if (!(flags & CBLK_INVALID_MSK)) {

cv.signal();

}

lock.unlock();

return true;

}